What is Kubernetes?

Kubernetes, also known as K8s, is an open-source container orchestration tool that automates container deployment, scaling and load balancing. It schedules, runs and manages isolated containers on virtual, physical or cloud machines. All major cloud providers support Kubernetes.

It was developed at Google, released as open source in 2014, and is now maintained under the Cloud Native Computing Foundation (CNCF).

Features of Kubernetes

- Container orchestration
- High availability
- Load balancing
- Self-healing
- Automatic scaling
- Rollouts and rollbacks
- Configuration management
- Secret management
- Extensibility
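Several of these features show up in one ordinary Deployment manifest. The sketch below is a small bash helper, `deployment_manifest` (a hypothetical name), that prints such a manifest. The name `web`, the image `nginx:1.25` and the replica count are illustrative assumptions, not values from this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a Deployment manifest to stdout.
# Arguments: name, container image, desired replica count.
deployment_manifest() {
  local name="$1" image="$2" replicas="$3"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: ${replicas}   # desired Pod count; Pods that die are replaced (self-healing)
  selector:
    matchLabels:
      app: ${name}
  strategy:
    type: RollingUpdate   # new versions roll out a few Pods at a time
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  template:
    metadata:
      labels:
        app: ${name}      # a Service can select these Pods for load balancing
    spec:
      containers:
        - name: ${name}
          image: ${image}
          ports:
            - containerPort: 80
EOF
}

deployment_manifest web nginx:1.25 3
```

On a running cluster, `deployment_manifest web nginx:1.25 3 | kubectl apply -f -` creates the Deployment. `kubectl scale deployment web --replicas=5` changes the replica count, and `kubectl rollout undo deployment/web` rolls back to the previous revision. Scaling automatically in response to load would also need a HorizontalPodAutoscaler, which this sketch does not include.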

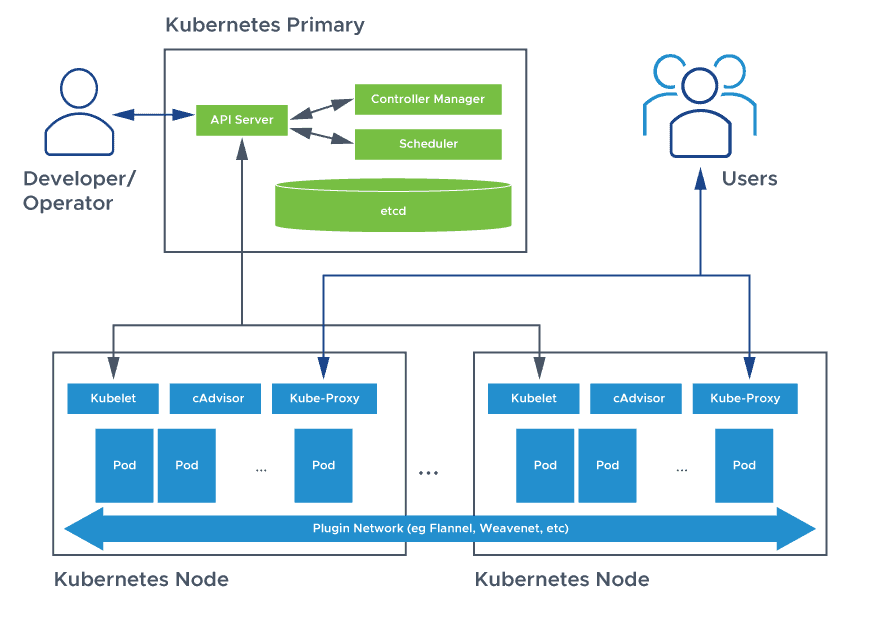

Kubernetes Architecture & Components

The Kubernetes architecture follows a control plane/worker model. The control plane (traditionally called the master node) manages the entire cluster, while the worker nodes host the containers.

Kubernetes control plane: manages the Kubernetes cluster and the workloads running on it. It includes components such as the API server, scheduler, controller manager and etcd.

Kubernetes data plane: the machines (worker nodes) that run containerized workloads. Each node is managed by the kubelet, an agent that receives commands from the control plane.

Pods: the smallest unit Kubernetes provides for managing containerized workloads. A pod usually holds a single container. It can also group several tightly coupled containers that share the pod's network and storage and together form one functional unit or microservice.
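A Pod manifest for the common single-container case is short. This bash sketch prints one. `pod_manifest`, the Pod name and the image are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a single-container Pod manifest.
# Arguments: Pod name, container image.
pod_manifest() {
  local name="$1" image="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
  labels:
    app: ${name}
spec:
  containers:
    - name: ${name}       # more containers may be listed here; they share one IP
      image: ${image}
      ports:
        - containerPort: 80
EOF
}

pod_manifest hello nginx:1.25
```

Run `pod_manifest hello nginx:1.25 | kubectl apply -f -` and then `kubectl get pod hello -o wide`. The second command shows the Pod, its IP and the node it was scheduled on.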

Persistent storage: a container's local storage on a Kubernetes node is ephemeral and is deleted when the pod is removed, which makes stateful applications difficult to run. Kubernetes provides the Persistent Volume (PV) mechanism, which lets containerized applications store data beyond the lifetime of a pod or node.
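A Pod requests persistent storage through a PersistentVolumeClaim (PVC). The bash sketch below prints a PVC and a Pod that mounts it. The helper name, the `busybox:1.36` image and the 1Gi size are assumptions for illustration. The claim only binds if the cluster has a default StorageClass or a matching PersistentVolume, and a plain kubeadm cluster like the one built below has neither out of the box.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a PersistentVolumeClaim plus a Pod that mounts it.
# Arguments: claim name, requested size (e.g. 1Gi).
pvc_with_pod_manifest() {
  local name="$1" size="$2"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce       # mountable read-write by a single node
  resources:
    requests:
      storage: ${size}
---
apiVersion: v1
kind: Pod
metadata:
  name: ${name}-writer
spec:
  containers:
    - name: writer
      image: busybox:1.36
      # Appends a timestamp on every start; the file lives on the volume, not in the container
      command: ["sh", "-c", "date >> /data/log.txt && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: ${name}
EOF
}

pvc_with_pod_manifest data 1Gi
```

After applying it, `kubectl get pvc` shows whether the claim is `Bound` or still `Pending`.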

Control plane components

API Server

- It exposes the Kubernetes API and is the front end of the Kubernetes control plane.
- It is the entry point for all REST requests, including those made with kubectl.
- It validates and processes API requests and stores the resulting state in etcd.
- It is the hub through which the other components read and update cluster state and interact with one another.
- It is designed to scale horizontally, so several instances can run side by side.
- It accepts object definitions (manifests) in JSON or YAML.
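The API server is a REST front end, so every object has a URL path. By the API's convention, core resources live under `/api/v1` and resources in named groups such as `apps` live under `/apis/<group>/<version>`. The bash sketch below builds the path for a namespaced resource. The `api_path` helper is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds the REST path of a namespaced resource.
# Arguments: group/version ("v1" for the core group), namespace, resource, optional name.
api_path() {
  local group_version="$1" namespace="$2" resource="$3" name="${4:-}"
  local path
  if [ "$group_version" = "v1" ]; then
    path="/api/v1"                  # core group: Pods, Services, ConfigMaps...
  else
    path="/apis/${group_version}"   # named groups, e.g. apps/v1
  fi
  path="${path}/namespaces/${namespace}/${resource}"
  if [ -n "$name" ]; then
    path="${path}/${name}"
  fi
  printf '%s\n' "$path"
}

api_path v1 default pods                  # /api/v1/namespaces/default/pods
api_path apps/v1 default deployments web  # /apis/apps/v1/namespaces/default/deployments/web
```

With a working kubeconfig, `kubectl get --raw "$(api_path v1 default pods)"` sends that request through kubectl and prints the raw JSON the API server returns.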

Scheduler

- It assigns Pods to worker nodes.
- It watches the API server for newly created Pods that have no node assigned. For each one, it selects a suitable node based on resource requests, constraints and node health.
- If no node is suitable, the Pod stays in the Pending state until one becomes available.
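One way to picture the scheduler's decision is as two phases: filter out nodes that cannot run the Pod, then score the rest. The bash sketch below is a toy model, not kube-scheduler's real algorithm. It reads hypothetical `<node> <free-millicores> <ready>` lines and keeps the ready nodes with enough free CPU. It then picks the one with the most free CPU, roughly in the spirit of a "least allocated" score.

```shell
#!/usr/bin/env bash
# Toy scheduler. stdin: one "<node> <free-millicores> <ready:true|false>" per line.
# Argument: the Pod's CPU request in millicores. Prints a node name or "Pending".
schedule_pod() {
  local request_mcpu="$1" best
  # Filter: ready nodes with enough free CPU. Score: highest free CPU wins.
  best="$(awk -v req="$request_mcpu" '$3 == "true" && $2 >= req { print $2, $1 }' |
    sort -rn | head -n 1 | awk '{ print $2 }')"
  if [ -n "$best" ]; then
    echo "$best"
  else
    echo "Pending"   # no suitable node: the Pod waits
  fi
}

printf '%s\n' "worker1 500 true" "worker2 1500 true" "worker3 4000 false" | schedule_pod 1000
# worker1 lacks CPU and worker3 is not ready, so this prints: worker2
```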

Controller Manager

- It watches the desired state of the objects it manages and their current state through the API server.
- It takes corrective steps so that the current state matches the desired state.
- It is often described as the controller of controllers.
- It runs the controller processes, for example the node, ReplicaSet and Deployment controllers. Logically, each controller is a separate process, but to reduce complexity they are all compiled into a single binary and run in a single process.
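This watch, compare and correct cycle is usually called a reconciliation loop. The bash sketch below is a toy version for a replica count. It compares a desired and an observed number of Pods and takes one corrective step per iteration until they match. `reconcile` is a hypothetical helper. Real controllers observe state through the API server and act by creating or deleting API objects.

```shell
#!/usr/bin/env bash
# Toy reconciliation loop. Arguments: desired replicas, currently observed replicas.
reconcile() {
  local desired="$1" current="$2"
  while [ "$current" -ne "$desired" ]; do
    if [ "$current" -lt "$desired" ]; then
      current=$((current + 1))          # too few Pods: create one
      echo "create pod -> current=${current}"
    else
      current=$((current - 1))          # too many Pods: delete one
      echo "delete pod -> current=${current}"
    fi
  done
  echo "in sync: ${current}/${desired}"
}

reconcile 3 1
# create pod -> current=2
# create pod -> current=3
# in sync: 3/3
```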

etcd

- It is a consistent, distributed and highly available key-value store.
- It is the stateful, persistent storage that holds all Kubernetes cluster data (cluster state and configuration).
- It is the source of truth for the cluster.
- It can run on the control plane nodes themselves, or it can be configured as an external cluster.
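By default, the API server stores objects in etcd under keys prefixed with `/registry`. For many namespaced resources, Pods included, the key has the form `/registry/<resource>/<namespace>/<name>`. The bash sketch below builds such a key with a hypothetical `etcd_key` helper. The commented `etcdctl` call has two assumptions: `etcdctl` must be installed on the control plane node, and it uses kubeadm's default certificate paths. Adjust both if your setup differs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds the etcd key under which a namespaced object is typically stored.
# Arguments: resource (plural), namespace, object name.
etcd_key() {
  local resource="$1" namespace="$2" name="$3"
  printf '/registry/%s/%s/%s\n' "$resource" "$namespace" "$name"
}

etcd_key pods default nginx   # /registry/pods/default/nginx

# On the control plane node (values are stored as protobuf, so list keys only):
# sudo ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
#   --cacert=/etc/kubernetes/pki/etcd/ca.crt \
#   --cert=/etc/kubernetes/pki/etcd/server.crt \
#   --key=/etc/kubernetes/pki/etcd/server.key \
#   get /registry/pods/default --prefix --keys-only
```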

Worker node components

A worker node runs the containerized applications and continuously reports its health to the API server in the control plane.

Kubelet

- It is an agent that runs on each node in the cluster.
- It acts as the conduit between the API server and the node.
- It watches the API server for Pods assigned to its node.
- It instantiates Pods by instructing the container runtime to start their containers.
- It makes sure that the containers in each Pod are running and healthy.
- It receives instructions from the control plane and reports node and Pod status back to it.
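A liveness probe is one concrete way the kubelet checks container health. The kubelet on the Pod's node runs the probe and restarts the container when it keeps failing, according to the Pod's `restartPolicy`. The bash sketch below prints a Pod with an HTTP liveness probe. The helper name, image and timings are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a Pod whose container has an HTTP liveness probe.
# Argument: Pod name.
probe_pod_manifest() {
  local name="$1"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  restartPolicy: Always      # the kubelet restarts the container after repeated probe failures
  containers:
    - name: web
      image: nginx:1.25
      livenessProbe:
        httpGet:             # the kubelet sends GET / to port 80 of the container
          path: /
          port: 80
        initialDelaySeconds: 5
        periodSeconds: 10
EOF
}

probe_pod_manifest probe-demo
```

After `probe_pod_manifest probe-demo | kubectl apply -f -`, the events listed by `kubectl describe pod probe-demo` include any probe failures and restarts. On the node itself, `journalctl -u kubelet` shows the kubelet's own logs.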

Kube-proxy

- It is a network proxy that runs on each node in the cluster.
- It maintains network rules on the node, for example iptables or IPVS rules. These rules allow network communication to Pods from inside or outside the cluster.
- It implements Kubernetes Services. It translates a Service's virtual IP address into the IP addresses of the Pods behind it and load-balances traffic across those Pods.
- Combined with NodePort and LoadBalancer Services, its rules make applications available to clients outside the cluster.
- Pod IP addresses and per-node subnets are assigned by the cluster's network (CNI) plugin, not by kube-proxy. All containers in a Pod share one IP because they share the Pod's network namespace.
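A Service is the object that kube-proxy turns into these network rules. The bash sketch below prints a ClusterIP Service. The helper name and ports are assumptions, and the Service expects Pods labelled `app=<name>` to exist.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a ClusterIP Service that load-balances across Pods labelled app=<name>.
# Arguments: Service name, Service port, container (target) port.
service_manifest() {
  local name="$1" port="$2" target_port="$3"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: ClusterIP            # stable virtual IP reachable inside the cluster
  selector:
    app: ${name}             # traffic goes to Pods carrying this label
  ports:
    - port: ${port}
      targetPort: ${target_port}
      protocol: TCP
EOF
}

service_manifest web 80 8080
```

When kube-proxy runs in iptables mode, it programs an applied Service into the `nat` table. Run `sudo iptables -t nat -L KUBE-SERVICES -n` on a node to list the Service's entries.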

Container runtime

- The container runtime is the software responsible for running containers (inside Pods).
- Each worker node has a container runtime, such as containerd, CRI-O or Docker (which the installation below uses).
- It pulls images from a container image registry and starts and stops containers.
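A short image name is expanded into a full reference before it is pulled. The bash sketch below is a simplified model of the usual Docker-style normalization:

- If no registry host is given, the registry defaults to `docker.io`.
- Single-name images get the `library/` prefix.
- A missing tag means `latest`.

`parse_image` is hypothetical and ignores digests (`@sha256:...`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints "<registry> <repository> <tag>" for an image reference.
# Simplified: digest references (name@sha256:...) are not handled.
parse_image() {
  local ref="$1" registry="docker.io" rest tag="latest"
  local first="${ref%%/*}"
  # The first path component is a registry host if it has a dot or a port, or is "localhost"
  if [ "$first" != "$ref" ] && { [[ "$first" == *.* || "$first" == *:* ]] || [ "$first" = "localhost" ]; }; then
    registry="$first"
    rest="${ref#*/}"
  else
    rest="$ref"
  fi
  # A tag follows the last colon in the final path component
  local last="${rest##*/}"
  if [[ "$last" == *:* ]]; then
    tag="${last##*:}"
    rest="${rest%:*}"
  fi
  # Official Docker Hub images live under library/
  if [ "$registry" = "docker.io" ] && [[ "$rest" != */* ]]; then
    rest="library/${rest}"
  fi
  echo "${registry} ${rest} ${tag}"
}

parse_image nginx:1.25                 # docker.io library/nginx 1.25
parse_image registry.k8s.io/pause:3.9  # registry.k8s.io pause 3.9
```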

Kubernetes Installation and Configuration

- First, launch two EC2 instances of type t2.medium (2 vCPUs, 4 GiB RAM) and name them master and worker.

- Install Docker on both instances:

```shell
sudo apt-get update
sudo apt install docker.io -y
sudo systemctl start docker
sudo systemctl enable docker
```

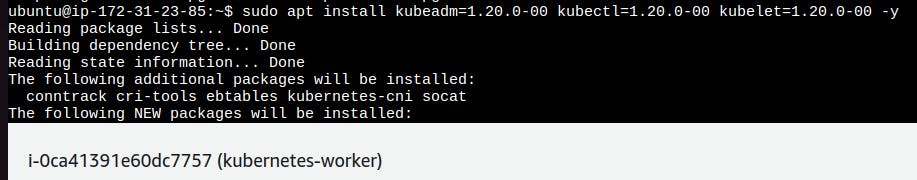

- Install kubeadm, kubectl and kubelet on both the master and worker nodes:

```shell
# Add the Kubernetes apt repository signing key and the repository itself
sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg
echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Install a pinned version of the three tools
sudo apt update -y
sudo apt install kubeadm=1.20.0-00 kubectl=1.20.0-00 kubelet=1.20.0-00 -y

# Keep apt from upgrading them unexpectedly
sudo apt-mark hold kubeadm kubectl kubelet
```
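Before running `kubeadm init`, you may want to confirm that the master meets the minimums that kubeadm's pre-flight checks enforce on a control-plane node: at least 2 CPUs and 1700 MB of RAM. The bash sketch below is a hypothetical helper that mirrors only those two checks. It takes the values as arguments, so it can be tried on any machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring two kubeadm control-plane pre-flight checks.
# Arguments: CPU count, total RAM in MB. Returns non-zero if either is too small.
check_resources() {
  local cpus="$1" mem_mb="$2" status=0
  if [ "$cpus" -lt 2 ]; then
    echo "FAIL: ${cpus} CPU(s), need at least 2"
    status=1
  fi
  if [ "$mem_mb" -lt 1700 ]; then
    echo "FAIL: ${mem_mb} MB RAM, need at least 1700"
    status=1
  fi
  if [ "$status" -eq 0 ]; then
    echo "OK: ${cpus} CPUs, ${mem_mb} MB RAM"
  fi
  return "$status"
}

# On the instance, feed it the real values:
# check_resources "$(nproc)" "$(free -m | awk '/^Mem:/ { print $2 }')"
```

A t2.medium instance (2 vCPUs, 4 GiB) passes both checks.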

- Configure the master node with these commands:

```shell
# Initialise the control plane (kubeadm must run as root)
sudo kubeadm init

# Let your regular user run kubectl
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Alternatively, if you are the root user, you can run:
export KUBECONFIG=/etc/kubernetes/admin.conf

# Install the Weave Net pod network (CNI) add-on
kubectl apply -f https://github.com/weaveworks/weave/releases/download/v2.8.1/weave-daemonset-k8s.yaml

# Print the join command to run later on the worker node
sudo kubeadm token create --print-join-command
```

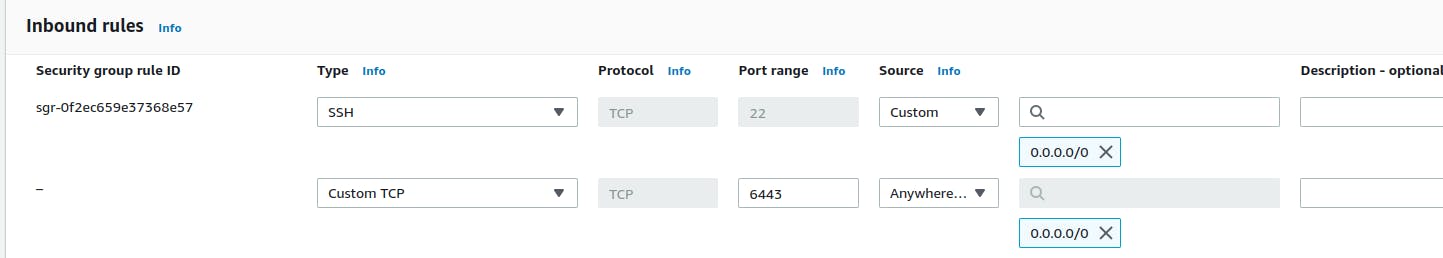

Before you join the worker node, add an inbound rule for TCP port 6443 (the Kubernetes API server port) to the master instance's security group.

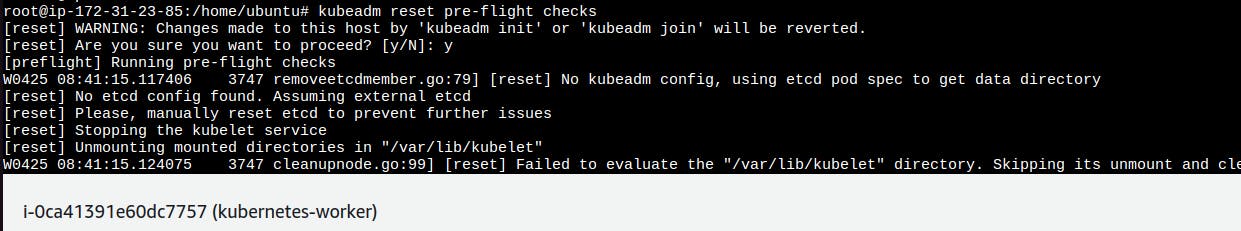

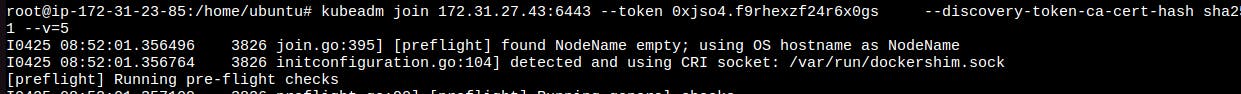
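Once the rule is in place, you can check from the worker that the master's API server port is reachable before running the join command. The bash sketch below is a hypothetical helper that uses bash's `/dev/tcp` pseudo-device and GNU `timeout`. `<master-private-ip>` is a placeholder for your master's address.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints "open" if a TCP connection to host:port succeeds within 3 s,
# otherwise "closed" (which also covers filtered or unreachable hosts).
check_port() {
  local host="$1" port="$2"
  # bash opens a TCP connection when redirecting from /dev/tcp/<host>/<port>
  if timeout 3 bash -c ": < /dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "open"
  else
    echo "closed"
  fi
}

# On the worker node:
# check_port <master-private-ip> 6443
```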

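- Configure the worker node with these commands:

```shell
# Clear any state left over from a previous kubeadm run (kubeadm runs its own pre-flight checks)
sudo kubeadm reset

# Run the join command printed on the master, adding --v=5 for verbose output, e.g.:
sudo kubeadm join <master-ip>:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash> --v=5
```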

- Check the connected nodes by running this command on the master node:

```shell
kubectl get nodes
```
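Both nodes should report `Ready` once the Weave Net pods are running. Until the pod network is up, a node shows `NotReady`. The bash sketch below is a hypothetical filter over `kubectl get nodes --no-headers` output that prints only the nodes that are not ready. It treats a cordoned `Ready,SchedulingDisabled` node as ready.

```shell
#!/usr/bin/env bash
# Hypothetical filter. stdin: `kubectl get nodes --no-headers` lines (NAME STATUS ROLES AGE VERSION).
# Prints the NAME of every node whose STATUS is not Ready.
not_ready_nodes() {
  awk '$2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}

# On the master node:
# kubectl get nodes --no-headers | not_ready_nodes
```

A built-in alternative is `kubectl wait --for=condition=Ready nodes --all --timeout=300s`, which blocks until every node is ready or the timeout expires.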

Thank you for reading!!